Meta’s recent changes to its content moderation and fact-checking policies, which are likely to increase harmful content including false information on its platforms, highlight some key implications for investors to consider. These include broad societal risks with related regulatory risks, and exposure to financial materiality risks with impacts on advertising spending.

Meta, the owner of social media platforms Facebook, Instagram and Threads, announced changes to its content moderation policies in the US on January 7, 2025. It is transitioning its fact-checking program toward a “community notes” approach (a user-generated system) and loosening some hate speech rules covering immigration and gender. It will also no longer demote content that has been fact-checked and deemed false, and it will replace its current full-screen warning with a much less obtrusive label. Below we outline the possible impact of these changes, highlighting key investment risk considerations.

Risk 1: Societal Risks of Increased Misinformation and Disinformation

Meta’s content moderation changes are likely to increase the societal risks associated with false information, as these updates are expected to facilitate the spread of such information on its platforms. Globally, false information has been linked to increasing doubt in factual evidence, making accurate information assessment more difficult, fueling polarization, and undermining public trust in democratic institutions, among other societal risks. For a second year in a row, the World Economic Forum’s 2025 Global Risks Report [1] cited misinformation and disinformation (i.e., the spread of false or misleading information) as the highest short-term global risk.

Table 1. Global Risks Ranked by Severity Over the Short and Long Term

| Rank | 2 years | 10 years |
| --- | --- | --- |
| 1st | Misinformation and disinformation | Extreme weather events |
| 2nd | Extreme weather events | Biodiversity loss and ecosystem collapse |
| 3rd | State-based armed conflict | Critical change to Earth systems |
| 4th | Societal polarization | Natural resource shortages |
| 5th | Cyber espionage and warfare | Misinformation and disinformation |
| 6th | Pollution | Adverse outcomes of AI technologies |
| 7th | Inequality | Inequality |
| 8th | Involuntary migration or displacement | Societal polarization |
| 9th | Geoeconomic confrontation | Cyber espionage and warfare |
| 10th | Erosion of human rights and/or civic freedoms | Pollution |

Source: Elsner, M., Atkinson, G., and Zahidi, S. 2025. “Global Risks Report 2025.” World Economic Forum. January 15, 2025. www.weforum.org/publications/global-risks-report-2025/.

Moreover, in recent years there have been several instances of false political information, resulting in increased political fragmentation and erosion of trust in government and its institutions. These dynamics were at play during the 2016 US presidential election. The heightened scrutiny Facebook (now Meta) received concerning the role of its platforms during that election prompted the company to implement its fact-checking program [2]. To avoid a repeat of misinformation during the 2020 US presidential election, Facebook put in place several initiatives. These included reducing the visibility of posts it deemed likely to incite violence, demoting content its systems predicted could be misinformation, and using its AI systems, third-party fact checkers and user reports to identify content that violated Facebook’s policies.

Although the results have not been perfect and there are still large amounts of false information being spread, social media platforms can arguably help manage its proliferation through content moderation. This includes monitoring user-generated content to ensure it adheres to rules and guidelines, removing non-compliant content and demoting potentially harmful content, making it less likely to be seen.

The societal impact of these changes is difficult to assess given the complexity; however, we expect to see an increase in risks given Meta’s decision to remove some tools it has been using to mitigate these issues. Societal risks can impact company valuation by generating regulatory, reputational and legal risks.

Regulation to Counteract Societal Risks

Governments worldwide have been looking into solutions, including regulations, to help address the issues surrounding misinformation and disinformation [3]. If societal risks were to increase, the pressure on governments to act could intensify, which could expose social media companies to additional regulations, compliance costs and fines or sanctions for violations. Globally, the most important regulation is the EU Digital Services Act (DSA) [4].

If Meta were to expand its content moderation changes outside of the US and into the EU, it could face additional scrutiny under the DSA. Meta is already facing formal proceedings opened by the European Commission in April 2024 to determine if Facebook and Instagram breached the DSA. Focus areas include deceptive advertisements and disinformation, the visibility of political content and the compliance of Meta’s mechanism to flag illegal content [5].

The DSA imposes its most stringent rules on very large online platforms (VLOPs) and very large online search engines. Facebook and Instagram are both classified as VLOPs, triggering specific rules they must comply with. These rules include obligations to mitigate “disinformation or election manipulation, cyber violence against women, or harms to minors online” [6], making platforms more accountable for their actions and any systemic risks they pose. Non-compliance can lead to fines of up to 6% of global turnover and to enforced measures to address breaches. As a measure of last resort, the Commission could request the temporary suspension of services [7].
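As a rough illustration of the fine ceiling described above, the following Python sketch computes the 6% cap for a given annual global turnover. The function name and the turnover figure are hypothetical placeholders rather than Meta’s reported results, and the sketch says nothing about how the Commission would set an actual penalty beneath that ceiling.

```python
DSA_MAX_FINE_RATE = 0.06  # ceiling stated in the text: up to 6% of global turnover


def max_dsa_fine(global_turnover: float) -> float:
    """Return the upper bound of a DSA fine for a given annual global turnover."""
    if global_turnover < 0:
        raise ValueError("turnover must be non-negative")
    return DSA_MAX_FINE_RATE * global_turnover


# Hypothetical turnover of 100 billion (any currency), for illustration only.
example_cap = max_dsa_fine(100e9)
print(f"Maximum fine: {example_cap / 1e9:.1f} billion")
```

Because the cap scales with turnover, the potential exposure grows in step with the size of the company.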

The Commission has also launched formal proceedings against other social media companies, including TikTok, over its alleged role in Romania’s annulled 2024 elections [8], and X, over the effectiveness of its community notes system in the EU [9].

Risk 2: Problems with User Experience and Brand Safety Could Lead to Bigger Financial Issues

Meta generates nearly all its revenue from advertising. In 2024, marketers listed Facebook and Instagram as their top two channels [10] because of their large user networks (around 3 billion and 2 billion, respectively) [11], ad targeting tools, and high engagement rates.

Content moderation has the benefit of protecting users from harmful or offensive content, leading to increased engagement and loyalty. Changes at Meta could make the user experience worse, leading to fewer users and less return for advertisers.

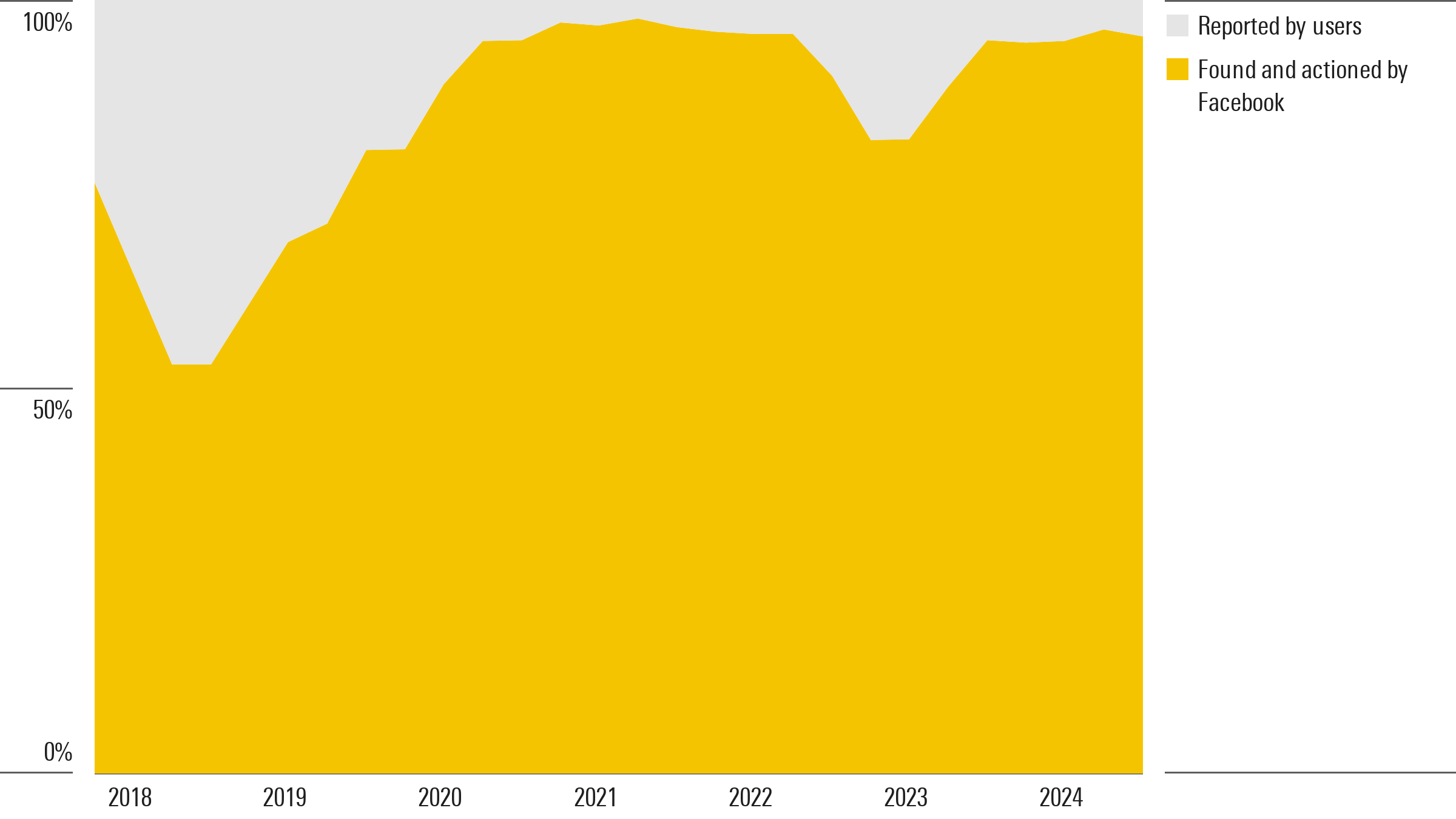

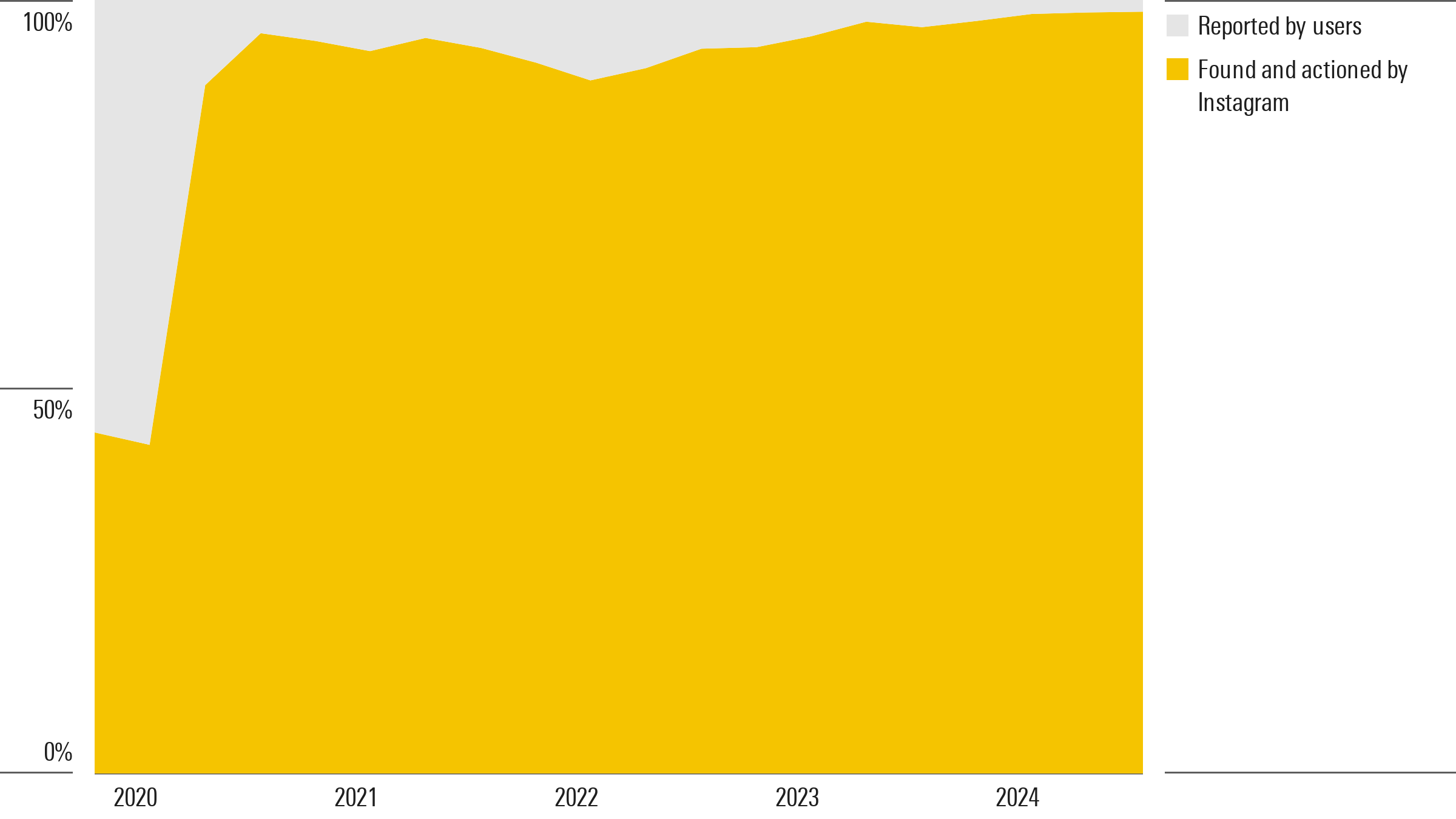

These changes could also negatively impact advertisers’ brand safety, which is an important factor when deciding where to spend valuable advertising budgets. Advertisers want to keep their brands from appearing next to inappropriate content for fear of being associated with it and harming their own reputation. As an example, Figures 1 and 2 show that most of the hate speech Meta actioned was found proactively, before users reported it. As such, it is likely that moving to a user-generated model will lead to an increase in hate speech on its platforms.

Figure 1. Facebook: Hate Speech Found and Actioned Before Being Reported by Users Versus After Being Reported

Source: Meta Platforms. “Community Standards Enforcement Report: Hate Speech.” Accessed January 31, 2025. https://transparency.meta.com/reports/community-standards-enforcement/hate-speech/facebook/.

Figure 2. Instagram: Hate Speech Found and Actioned Before Being Reported by Users Versus After Being Reported

Source: Meta Platforms. “Community Standards Enforcement Report: Hate Speech.” Accessed January 31, 2025. https://transparency.meta.com/reports/community-standards-enforcement/hate-speech/facebook/.

Community Notes: Did it Work for X?

Meta is looking to replicate the community notes system in place at X. It is notable, however, that several companies, citing brand safety concerns, paused or pulled their ads from X after their content appeared next to antisemitic, pro-Nazi, and other harmful content. Some have since resumed ad spending, but at much lower levels. A study by market research firm Kantar indicates that about 25% of advertisers plan to cut spending on X in 2025 and only 4% of the marketers surveyed believe X provides brand safety [12]. Bloomberg reported that X’s revenue over the first six months of 2023 was down 40% compared to the same period in 2022 [13].

The Center for Countering Digital Hate reviewed the effectiveness of the community notes system in reducing the spread of misinformation and found that the consensus requirement prevents most notes from reaching users [14]. The USC Viterbi Information Sciences Institute observed increases of 50% in hate speech, 30% in homophobic posts and 42% in racist posts from January 2022 to June 2023 [15].

While differences between the Meta and X platforms, such as size and ad-targeting capabilities, may shield Meta from some of the negative impact experienced at X, the community notes approach still exposes Meta to similar risks.

Assessing Meta’s Product Governance Risks

Morningstar Sustainalytics’ product governance material ESG issue (MEI) captures risks related to false information, hate speech and other product safety issues. Our methodology determines exposure to MEIs at the subindustry level – in Meta’s case, the internet software and services subindustry.

According to Sustainalytics research, Meta’s exposure to product governance risks is 50% higher than the average risk level of companies that Sustainalytics tracks in the internet software and services subindustry. Our analysis reflects the disproportionately high level of misinformation that proliferates on Meta’s platforms, aided by its massive user base. This misinformation covers important topics including elections and public health issues, among others. In addition, Meta’s algorithms may reduce the diversity of posts that viewers see and amplify political polarization.

Meta’s overall ESG Risk Rating, which is the sum of a company’s unmanaged MEI risks, places it in the high-risk category. We currently rate Meta’s unmanaged product governance risks as high, with a score of 7 [16]. These risks are likely to increase with Meta’s changes to its content moderation approach. The heightened risks could cause a move to the severe category for product governance if these changes lead to an extensive rise in the amount of false information and hate speech, which in turn triggers major regulatory risks or significant changes in user engagement and advertising spending. In Meta’s situation, a movement to 8 for its product governance MEI risk score would still result in an overall ESG Risk Rating in the high category.
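The category boundaries discussed here can be made concrete with a small Python sketch. It encodes only the two MEI bands given in the footnote on Sustainalytics’ categories (high: 6 to 8; severe: above 8). The function name is hypothetical, and scores below 6 are returned as an unnamed band because the lower categories are not defined in this article.

```python
def mei_risk_category(score: float) -> str:
    """Map an unmanaged MEI risk score to the bands stated in the article."""
    if score > 8:
        return "severe"
    if score >= 6:
        return "high"
    # Lower bands are not defined in the article, so they are left unnamed here.
    return "below high (band not specified in the article)"


# The current score (7), a move to 8, and a score just above the threshold.
for s in (7, 8, 8.1):
    print(s, mei_risk_category(s))
```

Under these bands, scores of 7 and 8 both sit in the high category for the MEI, and only a score above 8 would count as severe.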

It is still too early to determine conclusively the impact of these content moderation changes on the volume of false information and hate speech, as well as their impact on society, users and advertisers. Over the next year, we will continue reviewing the implications of Meta’s recent move and incorporating these within our ESG Risk assessment of the company.

References

1. Elsner, M., Atkinson, G., and Zahidi, S. “Global Risks Report 2025.” World Economic Forum. January 15, 2025. www.weforum.org/publications/global-risks-report-2025/.

2. Facebook. “A Look at Facebook and US 2020 Elections.” December 18, 2020. https://about.fb.com/wp-content/uploads/2020/12/US-2020-Elections-Report.pdf.

3. Funke, D., and Flamini, D. “A guide to anti-misinformation actions around the world.” Poynter. Accessed January 31, 2025. https://www.poynter.org/ifcn/anti-misinformation-actions/.

4. European Commission. “The enforcement framework under the Digital Services Act.” Accessed February 7, 2025. https://digital-strategy.ec.europa.eu/en/policies/dsa-enforcement.

5. European Commission. “Commission opens formal proceedings against Facebook and Instagram under the Digital Services Act.” Accessed February 7, 2025. https://ec.europa.eu/commission/presscorner/detail/en/ip_24_2373.

6. European Commission. “Questions and answers on the Digital Services Act.” February 22, 2024. https://ec.europa.eu/commission/presscorner/detail/en/qanda_20_2348.

7. European Commission. “DSA: Very large online platforms and search engines.” Accessed February 7, 2025. https://digital-strategy.ec.europa.eu/en/policies/dsa-vlops.

8. European Commission. “Commission opens formal proceedings against TikTok on election risks under the Digital Services Act.” Accessed February 7, 2025. https://ec.europa.eu/commission/presscorner/detail/en/ip_24_6487.

9. European Commission. “Commission opens formal proceedings against X under the Digital Services Act.” 2023. https://ec.europa.eu/commission/presscorner/detail/en/ip_23_6709.

10. HubSpot. “The state of marketing.” Accessed February 7, 2025. https://www.hubspot.com/hubfs/2024%20State%20of%20Marketing%20Report/2024-State-of-Marketing-HubSpot-CXDstudio-FINAL.pdf.

11. Statista. “Most popular social networks worldwide as of April 2024, by number of monthly active users.” Accessed February 7, 2025. https://www.statista.com/statistics/272014/global-social-networks-ranked-by-number-of-users/.

12. Kantar. “More marketers to pull back on X (Twitter) ad spend than ever before.” September 5, 2024. https://www.kantar.com/company-news/more-marketers-to-pull-back-on-x-ad-spend-than-ever-before.

13. Counts, A., and Wagner, K. “Documents show how Musk’s X plans to become the next Venmo.” Bloomberg. June 18, 2024. https://www.bloomberg.com/news/articles/2024-06-18/documents-show-how-musk-s-x-plans-to-become-the-next-venmo?srnd=markets-vp&sref=HrWXCALa.

14. Center for Countering Digital Hate. “Rated not helpful. How X’s Community Notes system falls short on misleading election claims.” October 30, 2024. https://counterhate.com/wp-content/uploads/2024/10/CCDH.CommunityNotes.FINAL-30.10.pdf.

15. Cohen, J. “A platform problem: Hate speech and bots still thriving on X.” USC Viterbi School of Engineering. February 12, 2025. https://viterbischool.usc.edu/news/2024/11/a-platform-problem-hate-speech-and-bots-still-thriving-on-x/.

16. In Sustainalytics’ ESG Risk Rating universe, the high-risk category for Material ESG Issues (MEIs) includes scores from 6-8 and severe risk includes scores above 8.
